Keyword [Shufflenet]

Zhang X, Zhou X, Lin M, et al. Shufflenet: An extremely efficient convolutional neural network for mobile devices[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 6848-6856.

1. Overview

1.1. Motivation

- limited computing power on mobile devices

- costly dense 1x1 convolutions

The paper proposes ShuffleNet, which is built from:

- pointwise group convolution

- depthwise convolution

- channel shuffle

(a) When group convolutions are stacked, the outputs of a given channel are derived from only a small fraction of the input channels. This blocks information flow between channel groups and weakens representation.

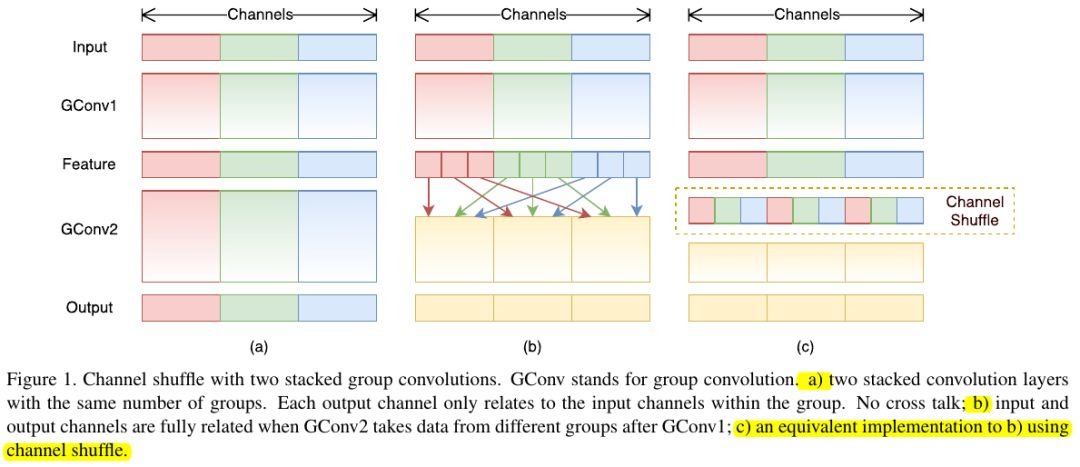
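The blocked information flow in (a) can be checked numerically. The following is a minimal NumPy sketch, not the paper's implementation: the function name `pointwise_group_conv`, the weight layout `(g, C_out/g, C_in/g)`, and the toy sizes are all assumptions made for illustration.

```python
import numpy as np

def pointwise_group_conv(x, weights):
    """1x1 group convolution (illustrative helper, not from the paper).

    x:       input of shape (C_in, H, W)
    weights: shape (g, C_out/g, C_in/g), one 1x1 kernel bank per group
    """
    g, out_per_group, in_per_group = weights.shape
    c_in, h, w = x.shape
    if c_in != g * in_per_group:
        raise ValueError("input channels must equal g * (C_in / g)")
    # Split the channels into g groups; each group is mixed independently.
    xg = x.reshape(g, in_per_group, h, w)
    yg = np.einsum("goi,gihw->gohw", weights, xg)
    return yg.reshape(g * out_per_group, h, w)

# Toy example: 4 input channels, 2 groups, 4 output channels.
x = np.arange(16, dtype=float).reshape(4, 2, 2)
w = np.ones((2, 2, 2))
y = pointwise_group_conv(x, w)

# Perturb one input channel that belongs to group 0.
x2 = x.copy()
x2[0] += 1.0
y2 = pointwise_group_conv(x2, w)

# Which output channels changed? Only those in group 0.
changed = np.any(y != y2, axis=(1, 2))
print(changed.tolist())
```

Output channels in the second group never see the perturbed input, which is the isolation that channel shuffle is meant to break.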

1.2. Channel Shuffle

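For a feature map with g x n channels, produced by a convolution with g groups of n channels each:

- reshape the channel dimension to (g, n)

- transpose it to (n, g)

- flatten it back to g x n channels, which become the input of the next group convolution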

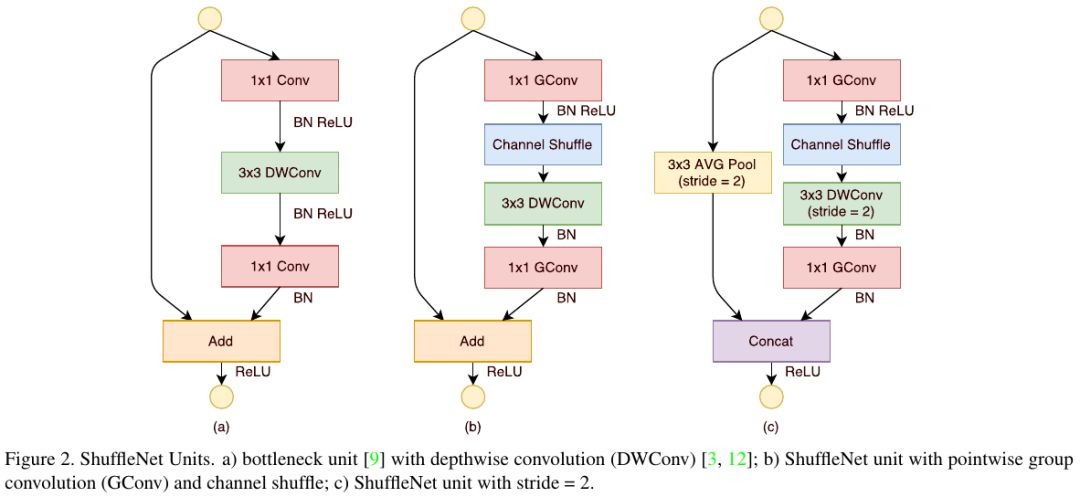
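The three steps above can be sketched in NumPy as follows. This is a minimal illustration assuming an `(N, C, H, W)` tensor layout; the function name `channel_shuffle` and the toy input are choices made here, not taken from the paper.

```python
import numpy as np

def channel_shuffle(x, groups):
    """Shuffle channels across groups for x of shape (N, C, H, W)."""
    n, c, h, w = x.shape
    if c % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    # Step 1: reshape the channel dimension to (g, n_per_group).
    x = x.reshape(n, groups, c // groups, h, w)
    # Step 2: transpose to (n_per_group, g).
    x = x.transpose(0, 2, 1, 3, 4)
    # Step 3: flatten back to g * n_per_group channels.
    return x.reshape(n, c, h, w)

# Six channels labelled 0..5, in 3 groups of 2: [0 1 | 2 3 | 4 5].
x = np.arange(6).reshape(1, 6, 1, 1)
shuffled = channel_shuffle(x, groups=3)
print(shuffled.ravel().tolist())  # [0, 2, 4, 1, 3, 5]
```

After the shuffle, each block of consecutive channels contains one channel from every original group, so the next group convolution mixes information across groups.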

1.3. ShuffleNet Unit

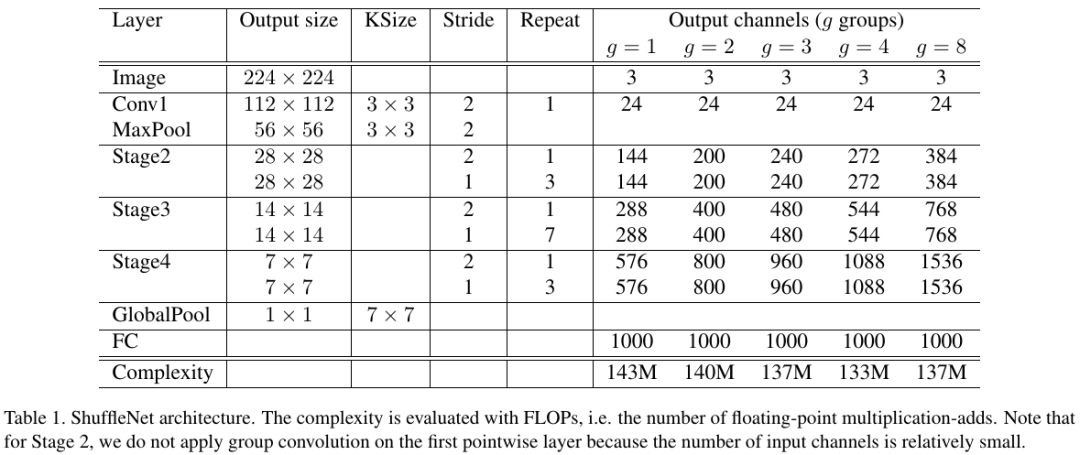

1.4. Architecture

1.5. Unit Comparison

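For a feature map with c input channels and m bottleneck channels, the cost per spatial position (multiply by h x w for the total FLOPs) is:

- ResNet. 2cm + 9m^2

- ResNeXt. 2cm + 9m^2/g

- ShuffleNet. 2cm/g + 9m

ShuffleNet applies group convolution to both 1x1 pointwise convolutions and uses a depthwise 3x3 convolution in the bottleneck, which is why its 3x3 term is only 9m.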
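To get a feel for these formulas, the sketch below evaluates them for one set of values. The function names and the sample values c = 240, m = 60, g = 3 are illustrative assumptions, not results reported in the paper.

```python
def resnet_unit_cost(c, m):
    """Per-position cost of a ResNet bottleneck unit: 2cm + 9m^2."""
    return 2 * c * m + 9 * m * m

def resnext_unit_cost(c, m, g):
    """Per-position cost of a ResNeXt unit: 2cm + 9m^2/g."""
    return 2 * c * m + 9 * m * m / g

def shufflenet_unit_cost(c, m, g):
    """Per-position cost of a ShuffleNet unit: 2cm/g + 9m."""
    return 2 * c * m / g + 9 * m

# Illustrative values only (assumed for this example).
c, m, g = 240, 60, 3

costs = {
    "ResNet": resnet_unit_cost(c, m),
    "ResNeXt": resnext_unit_cost(c, m, g),
    "ShuffleNet": shufflenet_unit_cost(c, m, g),
}
for name, cost in costs.items():
    print(f"{name}: {cost:.0f} multiply-adds per position")
```

With these values the ShuffleNet unit costs 10140 per position against 61200 for ResNet and 39600 for ResNeXt, so under a fixed budget it can afford wider feature maps.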

1.6. Related Work

1.6.1. Model

- GoogLeNet

- SqueezeNet

- SENet

- NASNet

1.6.2. Group Convolution

- AlexNet. kernels split across two GPUs, 50% on each

- ResNeXt

- Xception. depthwise

- MobileNet. depthwise

1.6.3. Channel Shuffle Operation

- cuda-convnet. random sparse convolution layer, equivalent to a random channel shuffle followed by a group convolution

1.6.4. Model Acceleration

- Pruning connection

- Channel reduction

- Quantization

- Factorization

- Implement convolution by FFT

- Distillation

- PVANET

2. Experiments

2.1. Hyperparameter

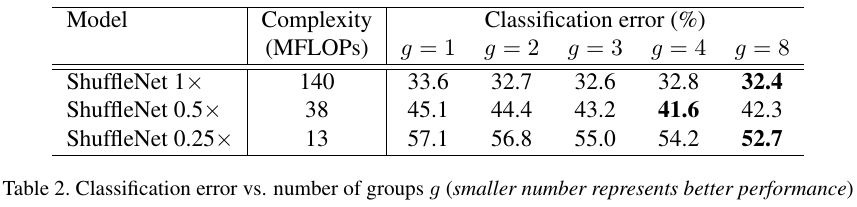

2.2. Shuffle Channel

- ShuffleNet sx. scales the number of filters of ShuffleNet 1x by s, so the overall complexity is roughly s^2 times that of ShuffleNet 1x.

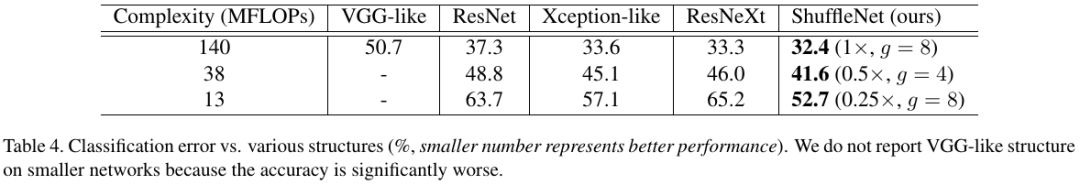

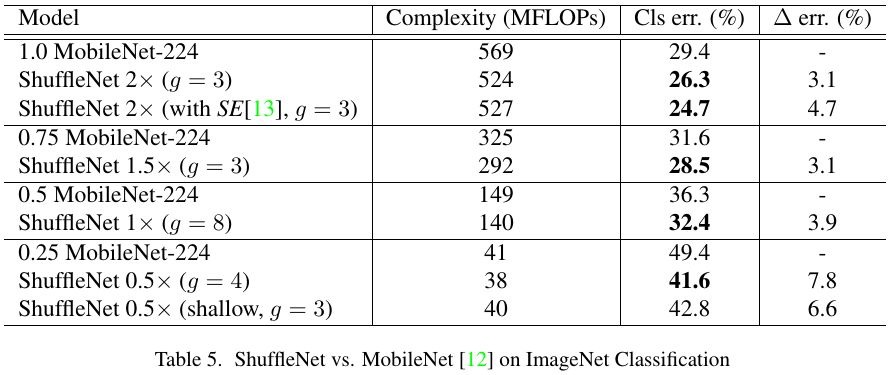

2.3. Comparison

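- ShuffleNet 0.5x has a theoretical speedup of 18x over AlexNet at comparable accuracy (about 13x in actual measurements on an ARM-based mobile device)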

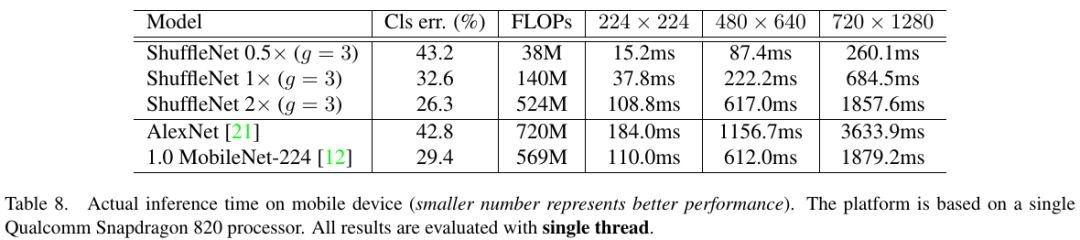

2.4. Inference Time on Mobile Devices

- Empirically, g = 3 usually offers a good trade-off between accuracy and actual inference time